Building a RAID 5 Array on CentOS

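Preface

I bought a USB drive enclosure that has no built-in RAID support, and I wanted to run RAID on it.

Windows 11: with a storage pool, disks dropped out as soon as I started copying data.

Windows Server 2016: could not create a storage pool (every disk in the enclosure reports the same serial number, and Disk Management could not convert the disks to dynamic disks either).

Unofficial Synology DSM ("黑群晖"): not very stable.

In the end I decided to build the RAID on Linux and share it to other machines over Samba.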

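Hardware

A small N3540 mini PC from the mining-crash surplus market (my performance needs are modest, I had one sitting idle, and its power draw is very low; it stays cool even without a fan)

ORICO drive enclosure (second-hand from Xianyu, 300 yuan for five bays)

Five hard drives (6 TB surveillance drives: slightly lower power draw, I don't need much speed, and everything goes over USB anyway, so there isn't much speed to be had)

Building the RAID 5 array

After installing CentOS, check the disk status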

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 238.5G 0 disk

├─sda1 8:1 0 200M 0 part /boot/efi

├─sda2 8:2 0 1G 0 part /boot

└─sda3 8:3 0 237.3G 0 part

├─centos-root 253:0 0 50G 0 lvm /

├─centos-swap 253:1 0 7.8G 0 lvm [SWAP]

└─centos-home 253:2 0 179.5G 0 lvm /home

sdb 8:16 0 5.5T 0 disk

sdc 8:32 0 5.5T 0 disk

sdd 8:48 0 5.5T 0 disk

sde 8:64 0 5.5T 0 disk

sdf 8:80 0 5.5T 0 disk

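All five disks are detected as sdb through sdf, and the capacities are correct (lsblk reports binary units, so a 6 TB drive shows up as 5.5T).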
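With several similar disks attached, it can help to list the candidate devices mechanically rather than by eye before handing them to mdadm. The following is a minimal sketch and not part of the original setup: `pick_disks` is a hypothetical helper that filters `lsblk -dn -o NAME,SIZE,TYPE` output down to whole disks of a given size. The sample input mirrors the lsblk listing above.

```shell
#!/bin/sh
# pick_disks SIZE: read "NAME SIZE TYPE" lines on stdin and print the /dev
# paths of whole disks whose SIZE column equals SIZE. (Hypothetical helper.)
pick_disks() {
    awk -v want="$1" '$3 == "disk" && $2 == want { print "/dev/" $1 }'
}

# On the real machine: lsblk -dn -o NAME,SIZE,TYPE | pick_disks 5.5T
# Here the input is a sample that mirrors the listing above.
printf '%s\n' \
    'sda 238.5G disk' \
    'sdb 5.5T disk' \
    'sdc 5.5T disk' \
    'sdd 5.5T disk' \
    'sde 5.5T disk' \
    'sdf 5.5T disk' | pick_disks 5.5T
```

The list should still be checked by hand, since creating an array overwrites whatever is on the member disks.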

Create the RAID with mdadm

[root@localhost ~]# sudo mdadm --create --verbose /dev/md0 --level=5 --raid-devices=5 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: partition table exists on /dev/sdb

mdadm: partition table exists on /dev/sdb but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sdc

mdadm: partition table exists on /dev/sdc but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sdd

mdadm: partition table exists on /dev/sdd but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sde

mdadm: partition table exists on /dev/sde but will be lost or

meaningless after creating array

mdadm: partition table exists on /dev/sdf

mdadm: partition table exists on /dev/sdf but will be lost or

meaningless after creating array

mdadm: size set to 5860390400K

mdadm: automatically enabling write-intent bitmap on large array

Continue creating array?

Continue creating array? (y/n) y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.

Check the RAID status

[root@localhost ~]# sudo mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Fri Jul 5 07:27:37 2024

Raid Level : raid5

Array Size : 23441561600 (22355.62 GiB 24004.16 GB)

Used Dev Size : 5860390400 (5588.90 GiB 6001.04 GB)

Raid Devices : 5

Total Devices : 5

Persistence : Superblock is persistent

Intent Bitmap : Internal

Update Time : Fri Jul 5 07:27:43 2024

State : clean, degraded, recovering

Active Devices : 4

Working Devices : 5

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Consistency Policy : bitmap

Rebuild Status : 0% complete

Name : localhost.localdomain:0 (local to host localhost.localdomain)

UUID : 3302b9f5:f38071b2:dc13cf9b:5356f797

Events : 2

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

2 8 48 2 active sync /dev/sdd

3 8 64 3 active sync /dev/sde

5 8 80 4 spare rebuilding /dev/sdf

Format the array
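The `mdadm --detail` output above shows the array as `clean, degraded, recovering` with /dev/sdf listed as `spare rebuilding`. That is how mdadm normally brings up a new RAID 5: it starts the array degraded and rebuilds onto the last disk. The array is usable during this initial sync, which is why formatting can go ahead right away, but on 6 TB disks behind USB the sync will probably take a long time. A small sketch for pulling the progress figure out of /proc/mdstat follows; `sync_progress` is a hypothetical helper name, and the sample text is illustrative, not captured from this machine.

```shell
#!/bin/sh
# sync_progress: read /proc/mdstat-style text on stdin and print the
# progress field, e.g. "recovery = 12.5%". (Hypothetical helper.)
sync_progress() {
    grep -oE '(recovery|resync) *= *[0-9.]+%' | tr -s ' '
}

# On the real machine: sync_progress < /proc/mdstat
# Illustrative sample input (numbers are made up):
sync_progress <<'EOF'
md0 : active raid5 sdf[5] sde[3] sdd[2] sdc[1] sdb[0]
      23441561600 blocks super 1.2 level 5, 512k chunk, algorithm 2 [5/4] [UUUU_]
      [>....................]  recovery =  0.1% (6291456/5860390400) finish=1234.5min speed=79000K/sec
EOF
```

For a live view, `watch -n 60 cat /proc/mdstat` shows the same information without any parsing.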

[root@localhost ~]# sudo mkfs.ext4 /dev/md0

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=512 blocks

366274560 inodes, 5860390400 blocks

293019520 blocks (5.00%) reserved for the super user

First data block=0

178845 block groups

32768 blocks per group, 32768 fragments per group

2048 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,

102400000, 214990848, 512000000, 550731776, 644972544, 1934917632,

2560000000, 3855122432, 5804752896

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

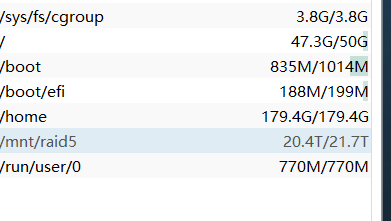
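The numbers in the mdadm and mkfs.ext4 output line up, and the arithmetic is a useful sanity check. RAID 5 keeps one disk's worth of parity, so usable capacity is (disks - 1) times the per-device size. The ext4 stride is the chunk size expressed in filesystem blocks, and the stripe width is the stride times the number of data disks. The sketch below only recomputes values already shown above; the variable names are mine.

```shell
#!/bin/sh
# Values taken from the mdadm --detail and mkfs.ext4 output above.
raid_devices=5
dev_size_kib=5860390400   # "Used Dev Size" in KiB
chunk_kib=512             # "Chunk Size"
block_bytes=4096          # ext4 block size

data_disks=$(( raid_devices - 1 ))               # one disk's worth goes to parity
array_kib=$(( data_disks * dev_size_kib ))       # usable array size in KiB
fs_blocks=$(( array_kib * 1024 / block_bytes ))  # same size in 4 KiB blocks
stride=$(( chunk_kib * 1024 / block_bytes ))     # chunk size in fs blocks
stripe_width=$(( stride * data_disks ))          # one full data stripe

echo "array size : $array_kib KiB"
echo "fs blocks  : $fs_blocks"
echo "stride     : $stride"
echo "stripe     : $stripe_width"
```

These match the reported `Array Size : 23441561600`, `5860390400 blocks` and `Stride=128 blocks, Stripe width=512 blocks`, which suggests mkfs.ext4 picked up the array geometry on its own.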

Once formatting is done the array can be mounted: create the /mnt/raid5 directory and mount md0 on it.

[root@localhost ~]# sudo mkdir /mnt/raid5

[root@localhost ~]# sudo mount /dev/md0 /mnt/raid5

At this point you can test storing data. Once testing is done, add the md device to /etc/fstab so it is mounted at boot.
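The fstab entry is just the filesystem UUID, the mount point, the filesystem type and a few option fields, so it can be generated instead of typed. This is a sketch under assumptions: `fstab_line` is a hypothetical helper, and the `nofail` variant shown at the end is my own suggestion for a USB enclosure that may not be powered on at boot, not something the original setup uses.

```shell
#!/bin/sh
# fstab_line UUID MOUNTPOINT [OPTIONS]: print an ext4 fstab entry.
# OPTIONS defaults to "defaults", matching the entry used in this post.
# (Hypothetical helper.)
fstab_line() {
    printf 'UUID=%s %s ext4 %s 0 2\n' "$1" "$2" "${3:-defaults}"
}

# On the real machine the UUID can be read with:
#   blkid -s UUID -o value /dev/md0
fstab_line 7af86e80-718d-4cc5-8556-a0c58c6bb760 /mnt/raid5

# Optional variant (assumption, not from the original setup): with nofail,
# boot continues even if the array is missing.
fstab_line 7af86e80-718d-4cc5-8556-a0c58c6bb760 /mnt/raid5 defaults,nofail
```

After editing /etc/fstab, running `mount -a` is a quick way to catch typos before the next reboot.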

Look up the UUID

[root@localhost ~]# sudo blkid /dev/md0

/dev/md0: UUID="7af86e80-718d-4cc5-8556-a0c58c6bb760" TYPE="ext4"

Note the UUID, then edit /etc/fstab and add this line:

UUID=7af86e80-718d-4cc5-8556-a0c58c6bb760 /mnt/raid5 ext4 defaults 0 2

Save the RAID configuration
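One caveat for this step: while the initial sync is still running, `mdadm --detail --scan` counts the disk being rebuilt as a spare, so the saved ARRAY line carries `spares=1`. According to the mdadm.conf man page that value is only consulted by `mdadm --monitor`, which reports an array with fewer spares than expected, so once the rebuild finishes the entry may trigger missing-spare reports. A minimal sketch that drops the field before the line is written; `strip_spares` is a hypothetical helper name.

```shell
#!/bin/sh
# strip_spares: remove the "spares=N" field from ARRAY lines read on stdin.
# (Hypothetical helper.)
strip_spares() {
    sed -E 's/[[:space:]]+spares=[0-9]+//'
}

# On the real machine:
#   mdadm --detail --scan | strip_spares | sudo tee -a /etc/mdadm.conf
# Demonstration with the ARRAY line from this post:
printf '%s\n' 'ARRAY /dev/md0 metadata=1.2 spares=1 name=localhost.localdomain:0 UUID=3302b9f5:f38071b2:dc13cf9b:5356f797' | strip_spares
```

Because `tee -a` appends, running the save command twice leaves duplicate ARRAY lines in /etc/mdadm.conf, so it is worth looking at the file afterwards.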

[root@localhost ~]# sudo mdadm --detail --scan | sudo tee -a /etc/mdadm.conf

ARRAY /dev/md0 metadata=1.2 spares=1 name=localhost.localdomain:0 UUID=3302b9f5:f38071b2:dc13cf9b:5356f797

Speed test

[root@localhost raid5]# dd if=/dev/zero of=/mnt/raid5/test bs=1M count=1024

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB) copied, 2.93325 s, 366 MB/s
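A 1 GiB write without any sync option can finish largely in the page cache, so the 366 MB/s above likely reflects memory speed more than what the USB-attached array sustains, especially while it is still rebuilding. A hedged alternative is to have dd flush the data before it reports the timing. The sketch below assumes GNU dd (for `conv=fdatasync`); `write_test` is a hypothetical helper, and the demonstration runs against a scratch directory so it does not depend on /mnt/raid5.

```shell
#!/bin/sh
# write_test DIR [COUNT_MIB]: sequential write of COUNT_MIB MiB of zeros into
# DIR, with the flush to disk included in dd's timing. Assumes GNU dd.
# (Hypothetical helper.)
write_test() {
    dir=$1
    count=${2:-1024}
    dd if=/dev/zero of="$dir/ddtest.bin" bs=1M count="$count" conv=fdatasync
    rm -f "$dir/ddtest.bin"
}

# On the machine in this post: write_test /mnt/raid5 1024
# Small demonstration against a scratch directory:
scratch=$(mktemp -d)
write_test "$scratch" 8
rmdir "$scratch"
```

Zeros from /dev/zero are fine for ext4 on md since nothing in this stack compresses data, but a single sequential write says little about Samba or small-file performance.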