Recovering from a CentOS RAID5 Failure That Forces Boot into Emergency (Rescue) Mode

Background

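After a power outage the RAID array failed, so the server booted into emergency mode and the services that depend on the array could not start.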

How it was handled

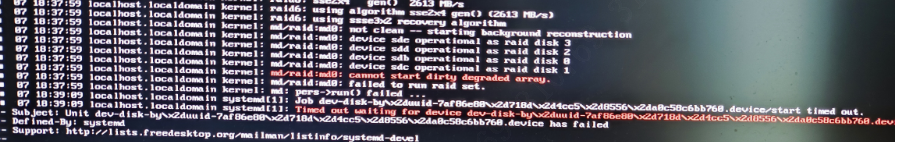

View the boot log with `journalctl -xb`.
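The boot log can be long. Below is a minimal sketch for narrowing it down to the lines that usually explain a failed RAID mount. The patterns are assumptions based on messages systemd commonly logs when an fstab entry cannot be mounted, plus any mention of an `md` device. The function names are hypothetical.

```python
import re
import subprocess

# Assumed patterns: typical systemd messages for an fstab mount that cannot be
# satisfied, plus any mention of an md device. Adjust them to your own log.
SUSPECT_PATTERNS = [
    re.compile(r"Timed out waiting for device"),
    re.compile(r"Dependency failed for"),
    re.compile(r"\bmd\d+\b"),
]


def suspicious_lines(journal_text: str) -> list[str]:
    """Return the journal lines that match any suspect pattern, in order."""
    return [
        line.strip()
        for line in journal_text.splitlines()
        if any(p.search(line) for p in SUSPECT_PATTERNS)
    ]


def current_boot_journal() -> str:
    """Read the current boot's journal (-b) with catalog explanations (-x)."""
    result = subprocess.run(
        ["journalctl", "-xb", "--no-pager"],
        capture_output=True, text=True, check=False,
    )
    return result.stdout
```

On the affected machine, you would print `suspicious_lines(current_boot_journal())` line by line. The filtering function takes plain text, so it can also be used on a saved copy of the log.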

Disable the RAID mount at boot

Edit /etc/fstab and remove the RAID mount. With that entry disabled, the system can reboot normally.

```console
[root@192 ~]# cat /etc/fstab
#
# /etc/fstab
# Created by anaconda on Fri Jul 5 06:41:45 2024
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/centos-root / xfs defaults 0 0
UUID=1d3a5601-d085-4d87-b418-63e9d48aa2e4 /boot xfs defaults 0 0
#UUID=7af86e80-718d-4cc5-8556-a0c58c6bb760 /mnt/raid5 ext4 defaults 0 2
UUID=525E-341C /boot/efi vfat umask=0077,shortname=winnt 0 0
/dev/mapper/centos-home /home xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
```

Simply comment out the RAID entry (`/mnt/raid5`) in fstab, then save the file and reboot.
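The same edit can be scripted. Below is a minimal sketch that comments out every active fstab entry whose mount point (the second field, per fstab(5)) matches the given path. Comments and blank lines are left as they are. The function name is hypothetical.

```python
def comment_out_mount(fstab_text: str, mount_point: str) -> str:
    """Return fstab text with every active entry for mount_point commented out.

    Comment lines and blank lines are kept unchanged; an entry is matched
    on its second whitespace-separated field (the mount point).
    """
    out = []
    for line in fstab_text.splitlines():
        fields = line.split()
        is_entry = len(fields) >= 2 and not fields[0].startswith("#")
        if is_entry and fields[1] == mount_point:
            out.append("#" + line)  # disable the entry without deleting it
        else:
            out.append(line)
    return "\n".join(out) + "\n"
```

Running it twice gives the same result, because entries that are already commented out are skipped. Keep a backup of /etc/fstab before writing the result back.

An alternative some administrators use is to leave the entry active and add the `nofail` option. With `nofail`, systemd does not make the boot depend on that mount.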

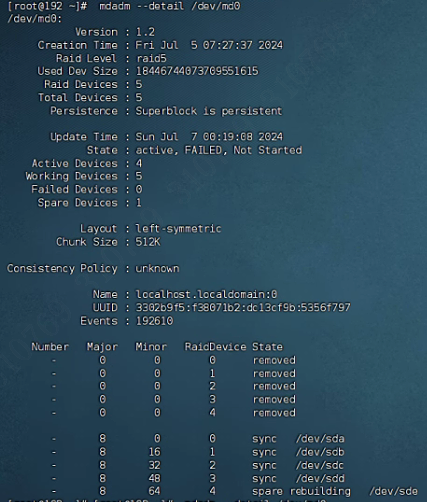

Check the RAID status

```console
[root@192 ~]# mdadm --detail /dev/md0
/dev/md0:
Version : 1.2
Creation Time : Fri Jul 5 07:27:37 2024
Raid Level : raid5
Used Dev Size : 18446744073709551615
Raid Devices : 5
Total Devices : 5
Persistence : Superblock is persistent
Update Time : Sun Jul 7 00:19:08 2024
State : active, FAILED, Not Started
Active Devices : 4
Working Devices : 5
Failed Devices : 0
Spare Devices : 1
Layout : left-symmetric
Chunk Size : 512K
Consistency Policy : unknown
Name : localhost.localdomain:0
UUID : 3302b9f5:f38071b2:dc13cf9b:5356f797
Events : 192610

Number Major Minor RaidDevice State
- 0 0 0 removed
- 0 0 1 removed
- 0 0 2 removed
- 0 0 3 removed
- 0 0 4 removed
- 8 0 0 sync /dev/sda
- 8 16 1 sync /dev/sdb
- 8 32 2 sync /dev/sdc
- 8 48 3 sync /dev/sdd
- 8 64 4 spare rebuilding /dev/sde
```

The array is in the FAILED state and has not been started. Only 4 of the 5 devices are active, and /dev/sde is still listed as a spare in the middle of a rebuild.
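To spot these red flags without reading the whole dump by eye, below is a minimal sketch that parses the `Key : Value` lines of `mdadm --detail` and reports the problems visible above.

The `Used Dev Size` of 18446744073709551615 equals 2^64 - 1. This looks like -1 stored in an unsigned 64-bit field, which presumably means no valid size was available while the array was not started. That reading is an interpretation, not something the output states. The function names are hypothetical.

```python
U64_MAX = 2**64 - 1  # 18446744073709551615, i.e. -1 as an unsigned 64-bit value


def parse_mdadm_detail(text: str) -> dict[str, str]:
    """Collect the 'Key : Value' lines of `mdadm --detail` output into a dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(" : ")
        if sep:  # device-table rows contain no " : " and are skipped
            info[key.strip()] = value.strip()
    return info


def problems(info: dict[str, str]) -> list[str]:
    """List the red flags visible in a --detail dump such as the failed md0."""
    found = []
    state = info.get("State", "")
    if "FAILED" in state or "Not Started" in state:
        found.append(f"array not running: {state}")
    if info.get("Used Dev Size") == str(U64_MAX):
        found.append("Used Dev Size is 2**64 - 1, so no valid size was reported")
    active, total = info.get("Active Devices"), info.get("Raid Devices")
    if active != total:
        found.append(f"only {active} of {total} devices active")
    return found
```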

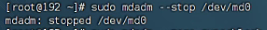

Stop the RAID

```console
[root@192 ~]# sudo mdadm --stop /dev/md0
```

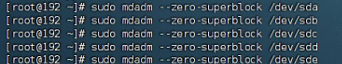

```console
mdadm: stopped /dev/md0
```

Zero the superblock on every member disk
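`--zero-superblock` erases each member's RAID metadata, including level, chunk size, layout and the disk's role in the array. It can therefore be worth saving `mdadm --examine` output for every disk first.

Below is a minimal sketch that only builds the command list for review and executes nothing. The backup directory `/root/md0-backup` and the function name are hypothetical. The device list is the one used in this post.

```python
import shlex

MEMBERS = ["/dev/sda", "/dev/sdb", "/dev/sdc", "/dev/sdd", "/dev/sde"]


def zeroing_plan(members: list[str], backup_dir: str = "/root/md0-backup") -> list[str]:
    """Return shell commands that save each member's metadata, then zero it.

    Nothing is executed here: review the list, then run it by hand.
    backup_dir is a hypothetical location.
    """
    cmds = [f"mkdir -p {shlex.quote(backup_dir)}"]
    for dev in members:
        name = dev.rsplit("/", 1)[-1]
        out = shlex.quote(f"{backup_dir}/{name}.examine")
        cmds.append(f"mdadm --examine {shlex.quote(dev)} > {out}")
    # Only after every --examine report is saved are the superblocks wiped.
    cmds += [f"mdadm --zero-superblock {shlex.quote(dev)}" for dev in members]
    return cmds
```

Generating the plan instead of running it keeps the destructive step a deliberate, manual action.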

```console
[root@192 ~]# sudo mdadm --zero-superblock /dev/sda
[root@192 ~]# sudo mdadm --zero-superblock /dev/sdb
[root@192 ~]# sudo mdadm --zero-superblock /dev/sdc
[root@192 ~]# sudo mdadm --zero-superblock /dev/sdd
[root@192 ~]# sudo mdadm --zero-superblock /dev/sde
```

Rebuild the RAID
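The create command used here relies on mdadm's defaults. Re-creating an array over existing members keeps the data readable only if the new array has the same geometry as the old one. That means the same level, chunk size, layout and metadata version, with the devices in the same order.

The failed array's `--detail` output recorded these values:

- raid5
- 512K chunk
- left-symmetric layout
- metadata 1.2
- /dev/sda to /dev/sde in RaidDevice slots 0 to 4

Below is a minimal sketch, assuming those recorded values, that spells every parameter out instead of depending on defaults. `ArrayParams` and `create_argv` are hypothetical names. The mdadm options are the standard `--level`, `--raid-devices`, `--chunk` (in KiB), `--layout` and `--metadata`.

```python
from dataclasses import dataclass


@dataclass
class ArrayParams:
    """Geometry recorded from the old array's `mdadm --detail` output."""
    level: int
    chunk_kib: int
    layout: str
    metadata: str
    devices: list[str]  # must follow the original RaidDevice order (0, 1, 2, ...)


def create_argv(md: str, p: ArrayParams) -> list[str]:
    """Build an mdadm --create command line that pins every geometry parameter."""
    return [
        "mdadm", "--create", md,
        f"--level={p.level}",
        f"--raid-devices={len(p.devices)}",
        f"--chunk={p.chunk_kib}",
        f"--layout={p.layout}",
        f"--metadata={p.metadata}",
    ] + p.devices
```

Matching geometry is necessary but may not be sufficient. The data offset chosen by the installed mdadm version must also match the original, and `mdadm --examine` on a member reports it as `Data Offset`. It is worth recording before any superblock is zeroed.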

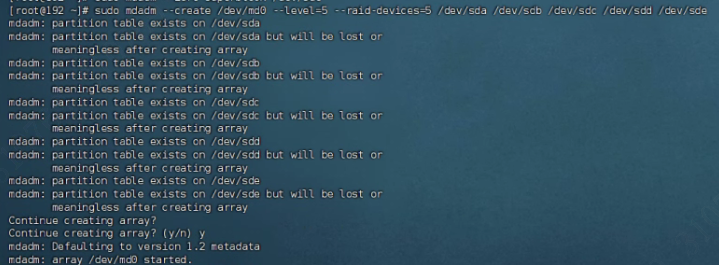

```console
[root@192 ~]# sudo mdadm --create /dev/md0 --level=5 --raid-devices=5 /dev/sda /dev/sdb /dev/sdc /dev/sdd /dev/sde
mdadm: partition table exists on /dev/sda
mdadm: partition table exists on /dev/sda but will be lost or
       meaningless after creating array
mdadm: partition table exists on /dev/sdb
mdadm: partition table exists on /dev/sdb but will be lost or
       meaningless after creating array
mdadm: partition table exists on /dev/sdc
mdadm: partition table exists on /dev/sdc but will be lost or
       meaningless after creating array
mdadm: partition table exists on /dev/sdd
mdadm: partition table exists on /dev/sdd but will be lost or
       meaningless after creating array
mdadm: partition table exists on /dev/sde
mdadm: partition table exists on /dev/sde but will be lost or
       meaningless after creating array
Continue creating array?
Continue creating array? (y/n) y
mdadm: Defaulting to version 1.2 metadata
mdadm: array /dev/md0 started.
```

Check the RAID status again

```console
[root@192 ~]# sudo mdadm --detail /dev/md0
/dev/md0:
Version : 1.2
Creation Time : Sun Jul 7 19:01:16 2024
Raid Level : raid5
Array Size : 23441561600 (21.83 TiB 24.00 TB)
Used Dev Size : 5860390400 (5.46 TiB 6.00 TB)
Raid Devices : 5
Total Devices : 5
Persistence : Superblock is persistent
Intent Bitmap : Internal
Update Time : Sun Jul 7 19:01:21 2024
State : clean, degraded, recovering
Active Devices : 4
Working Devices : 5
Failed Devices : 0
Spare Devices : 1
Layout : left-symmetric
Chunk Size : 512K
Consistency Policy : bitmap
Rebuild Status : 0% complete
Name : 192.168.10.240:0 (local to host 192.168.10.240)
UUID : cf4c90df:b1b909d8:ef892cd0:537f8e83
Events : 2

Number Major Minor RaidDevice State
0 8 0 0 active sync /dev/sda
1 8 16 1 active sync /dev/sdb
2 8 32 2 active sync /dev/sdc
3 8 48 3 active sync /dev/sdd
5 8 64 4 spare rebuilding /dev/sde
```
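In this output, /dev/sde appears as "spare rebuilding" with `Rebuild Status : 0% complete`. This is expected. By default, mdadm creates a new RAID5 as a degraded array and then rebuilds onto the last device, and the progress can be followed in /proc/mdstat.

Two quick sanity checks on the result can be sketched as follows, using only the values printed in this post:

- The geometry fields should match the failed array's output.
- For RAID5, usable space should be (n - 1) times the per-device size, because one device's worth of space holds parity.

The function names are hypothetical, and sizes are in the 1 KiB units mdadm reports.

```python
def raid5_usable_kib(member_kib: int, n_devices: int) -> int:
    """RAID5 stores one member's worth of parity, so n-1 members hold data."""
    return (n_devices - 1) * member_kib


def geometry_diff(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Return the geometry fields whose values differ between two --detail dumps."""
    keys = ["Version", "Raid Level", "Raid Devices", "Layout", "Chunk Size"]
    return [k for k in keys if old.get(k) != new.get(k)]


# Values copied from the two `mdadm --detail /dev/md0` outputs in this post.
OLD = {"Version": "1.2", "Raid Level": "raid5", "Raid Devices": "5",
       "Layout": "left-symmetric", "Chunk Size": "512K"}
NEW = {"Version": "1.2", "Raid Level": "raid5", "Raid Devices": "5",
       "Layout": "left-symmetric", "Chunk Size": "512K"}

used_dev_kib = 5860390400            # "Used Dev Size", in 1 KiB units
array_kib = raid5_usable_kib(used_dev_kib, 5)

print(geometry_diff(OLD, NEW))               # []
print(array_kib)                             # 23441561600, same as "Array Size"
print(round(array_kib / 2**30, 2))           # 21.83 (TiB)
print(round(array_kib * 1024 / 10**12, 2))   # 24.0 (TB)
```

Identical geometry fields and a consistent Array Size do not by themselves prove the old data is intact. Mounting the filesystem (ideally read-only first) is the real test.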